Streaming road network graphs with contraction hierarchies

Posted 16 Jul 2020

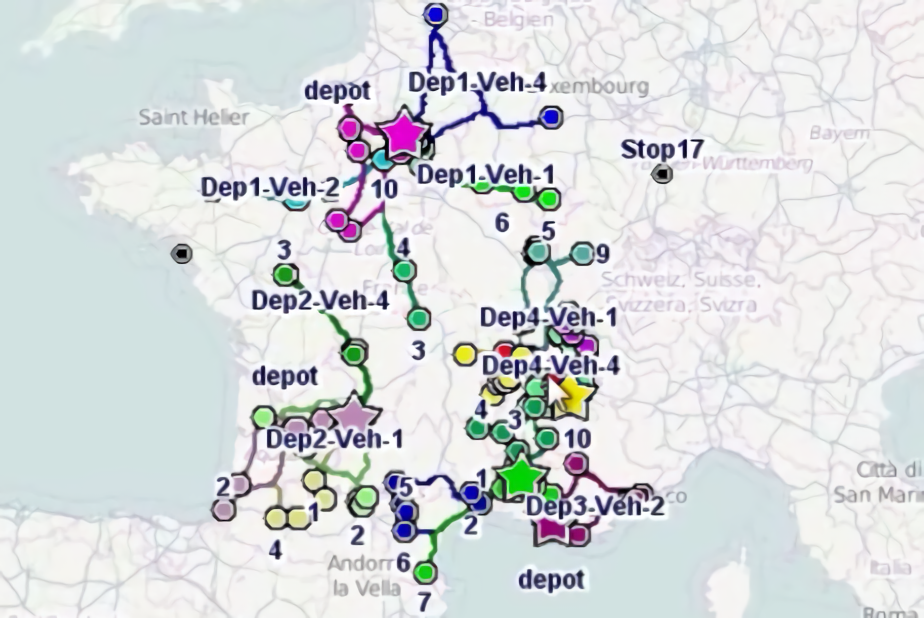

Fast road-network based travel time calculations are essential for realtime vehicle route optimisation, as you need automated driver dispatching to respond rapidly when new jobs are added or drivers get delayed. Similarly, if you want to solve large vehicle routing problems with thousands of stops, you need fast travel time calculations to populate the travel matrix in a reasonable timeframe.

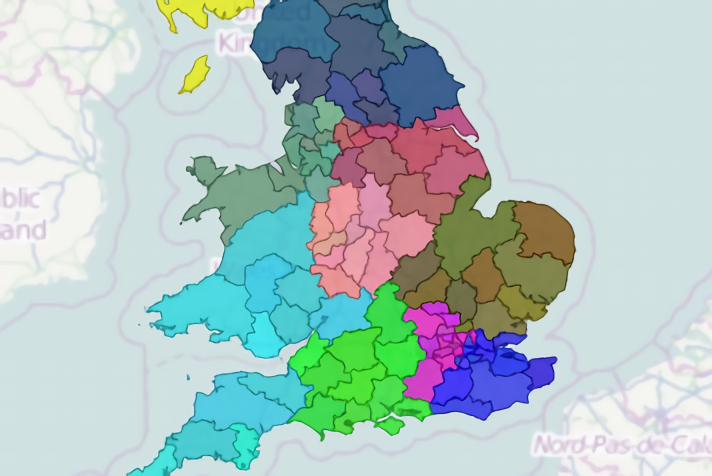

Internally to ODL Live - our realtime routing and scheduling engine - we use the open source Graphhopper library as part of our travel time calculations, combined with our own code for quick travel matrix calculation, traffic estimation and vehicle route optimisation. Travel time calculations use a road network graph – a digital map of the road network that's been pre-processed into 'graph' format. Previously we were using road networks graphs in Graphhopper format, but we've recently developed a new data format for storing road network graph data – ODL Live streaming graphs – which brings some major improvements.

Road network graph data is large, e.g. just France on its own can take up over 2 GB memory. Fast travel calculations need the road network data to be readily available, which ideally means pre-loaded into server memory. As a result, to provide routing services for whole continents or globally, you need servers with 50-100 GB memory. High-memory servers can be costly, and so we developed a new data format for road network graphs, designed for efficient streaming and caching.

The Graphhopper library has two distinct modes for accessing the road network graph data: (a) load all the graph data into memory and (b) memory-mapped file mode. The idea behind a memory mapped file is that the Java runtime executing the Graphhopper code can access the on-disk data files as if they're loaded into server memory, but the whole file doesn't get loaded into memory. Instead the operating system takes care of loading and caching parts of the file on-demand as needed.

So, if Graphhopper already supports loading parts of the graph on-demand, why develop a new streaming graphs format, and is it faster?

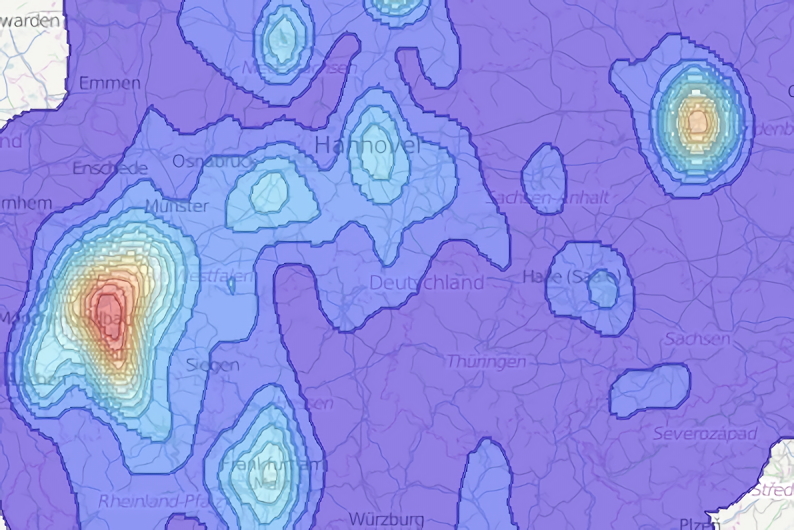

To answer this, we need to understand a little more about the algorithm Graphhopper uses for road network calculations. For decades, road network travel time calculations used Dijkstra's algorithm. Technically Dijkstra's algorithm is a 'shortest-path' algorithm, which means it identifies the shortest-path in a network between two points (where 'shortest' could actually be 'shortest distance' or 'quickest time'). We use the terms 'shortest-path algorithm' and 'travel time calculations' interchangeably; in this context they have effectively the same meaning. Dijkstra's algorithm requires parsing more-or-less the entire graph twice for each location, when calculating an n-squared distance matrix (i.e. all-locations to all-locations). In contrast, Graphhopper and several other open source shortest-path calculation engines (e.g. OSRM) use contraction hierarchies, which is a relatively new shortest-path algorithm. Contraction hierarchies speed up shortest-path calculations massively by identifying shortcuts in the graph. Think about driving across your country, you would use the motorways or highways, not the slow back roads. Contraction hierarchies exploits this fact by filtering out large parts of the graph which you would never use.

In ODL Live streaming graphs, we still use Graphhopper to build the initial road network graph with contraction hierarchies. However, we then implement an extra pre-processing phase which splits the data up into carefully designed chunks. Our new algorithm decides what data to chunk together based on minimising the number of chunk reads when a shortest-path calculation is performed. As we use contraction hierarchies, we only need to read a subset of the graph data to perform the shortest path calculation. The most important shortcuts in the contraction hierarchy graph (typically corresponding to motorways and highways), will be used in many different shortest-path calculations, to and from widely separated positions. Our data chunking algorithm takes account of this, ensuring that the most important shortcuts can be easily cached when the algorithm runs. We also developed a secondary chunking algorithm so we could quickly find the closest edge to a latitude-longitude point, before doing the shortest path query from the point.

The ODL Live streaming graphs file format is therefore built from the ground-up for performing fast contraction-hierarchy based travel time calculations, whilst only needing to load a small fraction of the graph data. This lets us perform road network travel time calculations on servers with limited memory.

So how does ODL Live streaming graphs compare to Graphhopper's existing modes, when server memory is limited?

We tested on a 1 GB RAM virtual Ubuntu server backed by a consumer-grade SSD. The operating system itself took around 500 MB memory, leaving around 500 MB for loading road network data and executing travel time calculations. We tested against a road network graph of France containing several speed profiles, which took up 2.1 GB. With this limited memory setup, the maximum possible server memory for travel time calculations was around a quarter of the total graph data (less if we consider the memory required to load the Java runtime).

We used the same Java code to generate travel time matrices between random points in France, but loading the data using (a) ODL Live's streaming graphs and then (b) Graphhopper's memory-mapped file mode. We excluded Graphhopper's 'load all' mode from the limited memory tests after verifying (as expected) it could not run without sufficient server memory available.

For the ODL Live streaming graph test, we gave Java 450 MB memory, of which 350 MB was reserved for our LRU chunk cache (i.e. we could hold a maximum of around 16% of the graph in-memory at once). For the Graphhopper memory-mapped mode, as memory-mapped files can use the remainder of the operating system memory not used by Java, it was unclear how much memory to give Java. We therefore repeated the Graphhopper memory-mapped test three times, giving Java 50 MB, 250 MB and 450 MB respectively.

Finally, we compared the limited memory test results to the unlimited memory case, where the virtual server was given ample memory and the travel time matrix generation code used Graphhopper's 'load all data' mode (i.e. the best possible performance case).

With limited memory, we found that our streaming graph calculations were on-average only 2.1 times slower than the unlimited memory case. In-contrast, Graphhopper's memory-mapped mode was around 50 times slower than the unlimited memory case.

Why is ODL Live streaming graph much faster than Graphhopper memory-mapped mode, if both modes stream and cache the data?

This is dependent on how the Linux operating system (and potentially the virtual machine's hypervisor), load and cache data for memory-mapped files. Our best guess is that it comes down to data locality though; ODL Live's streaming graph data chunking algorithm is designed to keep data together (in the same chunks) that will be needed together in the calculations, and to cache the most important data used across geographically-separated shortest-path queries. As a result, with even a relatively small amount of memory available to cache road network data, a single shortest-path query only needs to do a couple of small reads from disk.

For us, this was a great result, with streaming graphs we could get the same ballpark performance (within a factor of 2) whilst using only a fraction of the server memory. Moreover, when the server had ample memory, our streaming graphs ran around the same speed as fully loaded graphs (because the streaming graph eventually gets completely loaded into the chunk cache). Streaming graphs are now available in our latest ODL Live release.